Kubernetes is an open-source platform for managing containers. It automates container operations such as deployment, scaling up, and scaling down. It also provides load balancing to distribute traffic across containers.

1. History of Kubernetes

The name Kubernetes (abbreviated k8s) comes from a Greek word meaning “helmsman” (the person who steers a ship). Google announced Kubernetes in June 2014 as an open-source successor to Borg, its internal cluster manager. In 2015, alongside the Kubernetes 1.0 release, Google donated the project to the newly formed Cloud Native Computing Foundation (CNCF).

The Kubernetes project is written in the Go programming language, and because it is open source, its source code is available on GitHub.

2. Features of Kubernetes

Take the example of a legacy app that runs in 1,000 containers. Managing and scaling that many containers by hand is really tough. In such a case, we can use Kubernetes, which not only manages the containers but also scales them up and down.

For instance, let’s say we order a package from an e-commerce website. We just place the order with the details and a manifest, and the company takes care of the rest, such as packaging and logistics. Kubernetes works the same way: we hand over our app together with a manifest that describes it, and Kubernetes takes care of the rest.
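To make the manifest idea concrete, here is a minimal Python sketch that builds a Deployment manifest as a plain dictionary and prints it as JSON, a format `kubectl apply -f` accepts alongside YAML. The helper `make_deployment`, the app name `web`, and the image `nginx:1.25` are assumptions chosen for illustration.

```python
import json


def make_deployment(name, image, replicas):
    """Build a minimal Deployment manifest as a plain dictionary."""
    labels = {"app": name}  # label used to tie the Deployment to its pods
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # the desired number of pod copies
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical app: three replicas of an nginx container.
    manifest = make_deployment("web", "nginx:1.25", replicas=3)
    print(json.dumps(manifest, indent=2))
```

Saving the printed JSON to a file and applying it is the "placing the order" step; keeping three copies running is then left to Kubernetes.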

This is the most common use case in the industry today: Kubernetes automates the maintenance of large numbers of containers.

Kubernetes keeps our applications highly available by automatically restarting failed containers. Let us briefly go over some of its main features:

- Self-Healing capabilities to provide availability.

- Rollback capabilities in the applications.

- DNS management infrastructure.

- Auto-scalable infrastructure.

- Horizontal Scaling & Load Balancing.

- Application-centric management of containers.

- Ability to create a predictable infrastructure as per the requirement.

- Real-time health monitoring.
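The horizontal scaling feature listed above can be illustrated with the replica formula documented for the Horizontal Pod Autoscaler: desired = ceil(current * currentMetric / targetMetric). The sketch below applies that formula in Python. The function name, the clamping bounds, and the sample numbers are assumptions, and the real autoscaler applies further rules (such as a tolerance band and stabilization windows) that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Apply the documented HPA formula, then clamp to the allowed range."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Hypothetical case: 4 pods at 90% average CPU against a 60% target.
    print(desired_replicas(4, 90, 60))
```

When the observed metric is above the target the replica count grows, and when it is below the target the count shrinks, always within the configured minimum and maximum.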

3. Architecture of Kubernetes

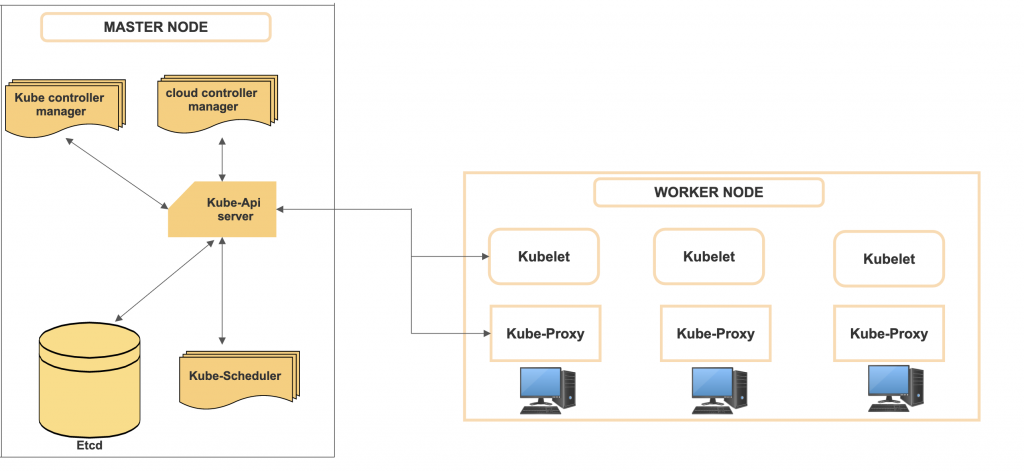

Kubernetes follows a master-worker architecture. Each cluster consists of one or more master nodes and multiple worker nodes. In other words, Kubernetes has two main kinds of nodes:

- Master nodes

- Worker nodes

3.1. Master node

A master node is a collection of components that together act as the control plane for managing the containers. Decisions about the cluster are made here. Whenever the admin sends a command to the system via the GUI, API, or CLI, the command goes to the master node.

We can run multiple master nodes to achieve high availability. In that case, components such as the scheduler and the controller manager elect a single leader that does the active work while the other replicas stand by as followers; the API server replicas, by contrast, can all serve requests at the same time. Master components can run on any node.
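Leader election of this kind is commonly built on a lease: the current leader must keep renewing a shared record, and a follower may take over only once the record has expired. Kubernetes uses Lease objects for this purpose; the `ToyLease` class below is a simplified, in-memory sketch of the idea, with hypothetical names and an assumed 15-second lease duration, not the actual implementation.

```python
class ToyLease:
    """In-memory stand-in for a shared lease record."""

    def __init__(self, duration_seconds):
        self.duration = duration_seconds
        self.holder = None        # identity of the current leader
        self.renewed_at = None    # time of the last successful renewal

    def try_acquire(self, candidate, now):
        """Acquire or renew the lease; return True if candidate is leader."""
        expired = (
            self.holder is None
            or now - self.renewed_at >= self.duration
        )
        if expired or self.holder == candidate:
            self.holder = candidate
            self.renewed_at = now
            return True
        return False


if __name__ == "__main__":
    lease = ToyLease(duration_seconds=15)
    print(lease.try_acquire("master-a", now=0))   # first candidate wins
    print(lease.try_acquire("master-b", now=5))   # lease still held
    print(lease.try_acquire("master-b", now=30))  # lease expired, takeover
```

Passing the time in as `now` keeps the sketch deterministic; a real component would read a clock and renew the lease on a timer.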

The key components of the master node are described below:

3.1.1. API Server

The API server is the central management entity and is responsible for executing all admin-related tasks on the master node. When a user sends a command, it goes to the API server, which validates the request, processes it, executes it, and persists the resulting state to etcd.

The core functions of the API server are as follows:

- The API server is the only Kubernetes component that talks to etcd directly. All other components must go through the API server.

- The API server also handles authentication and authorization for Kubernetes. All API clients must be authenticated and authorized before they can interact with the API server.
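The request path described above (authenticate, authorize, validate, then persist) can be sketched as a small Python class. Everything here is a toy stand-in: `ToyApiServer`, its token and permission tables, and the dictionary that plays the role of etcd are assumptions for illustration, and the status codes are only loosely modelled on HTTP.

```python
from dataclasses import dataclass, field


@dataclass
class ToyApiServer:
    tokens: dict        # token -> user name (authentication data)
    permissions: dict   # user name -> set of allowed verbs (authorization data)
    store: dict = field(default_factory=dict)  # stands in for etcd

    def handle(self, token, verb, key, obj=None):
        # 1. Authentication: who is making the request?
        user = self.tokens.get(token)
        if user is None:
            return 401, "unauthenticated"
        # 2. Authorization: is this user allowed to use this verb?
        if verb not in self.permissions.get(user, set()):
            return 403, "forbidden"
        # 3. Validation and persistence.
        if verb == "create":
            if not isinstance(obj, dict) or "kind" not in obj:
                return 422, "invalid object"
            self.store[key] = obj
            return 201, "created"
        if verb == "get":
            if key not in self.store:
                return 404, "not found"
            return 200, self.store[key]
        return 405, "unsupported verb"


if __name__ == "__main__":
    api = ToyApiServer(
        tokens={"secret-1": "admin"},
        permissions={"admin": {"create", "get"}},
    )
    print(api.handle("secret-1", "create", "pods/web", {"kind": "Pod"}))
    print(api.handle("bad-token", "get", "pods/web"))
```

Because only `handle` touches `store`, every read and write passes through the same checks, which mirrors why other components go through the API server instead of reaching etcd themselves.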

3.1.2. Etcd

Etcd is a consistent and highly available key-value store that Kubernetes uses for the persistent storage of all API objects. It holds both the state and the configuration of the Kubernetes cluster.

It also stores configuration details such as subnets and ConfigMaps. Etcd can run on the master nodes themselves, or we can set it up separately as an external cluster. It keeps its members in agreement using the Raft consensus algorithm.
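One practical consequence of Raft is that a write succeeds only when a majority (quorum) of etcd members agree, which determines how many member failures a cluster can survive. The short sketch below works through that arithmetic; the function names are hypothetical.

```python
def quorum(members):
    """Smallest number of members that forms a majority."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def fault_tolerance(members):
    """How many members can fail while a majority is still available."""
    return members - quorum(members)


if __name__ == "__main__":
    for size in (1, 3, 4, 5):
        print(size, quorum(size), fault_tolerance(size))
```

A 4-member cluster tolerates one failure, the same as a 3-member cluster, which is why etcd clusters are usually sized with an odd number of members.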

3.1.3. Controller Manager

The controller manager, also known as the kube-controller-manager, runs the cluster's controllers, each of which observes the cluster through the API server and reports its changes back to it.

A controller watches the shared state of the cluster through the API server and makes changes that move the current state towards the desired state. Examples of controllers are the Node controller, the Service Account and Token controllers, the Endpoints controller, and the Replication controller.

The controller manager also handles tasks such as namespace management and different kinds of garbage collection.
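The "move the current state towards the desired state" behaviour is easiest to see as a reconciliation function. The sketch below is a toy version for replica counts only: `reconcile`, the pod names, and the name generator are assumptions for illustration, and a real controller would create or delete pods through the API server instead of editing a list.

```python
import itertools


def reconcile(desired_replicas, running_pods, new_names):
    """Return the pod list after one pass of converging on the desired count."""
    pods = list(running_pods)          # never mutate the observed state
    while len(pods) < desired_replicas:
        pods.append(next(new_names))   # too few pods: start replacements
    while len(pods) > desired_replicas:
        pods.pop()                     # too many pods: remove the extras
    return pods


if __name__ == "__main__":
    names = (f"pod-{i}" for i in itertools.count(3))
    # One pod has crashed: two are running, three are desired.
    print(reconcile(3, ["pod-0", "pod-1"], names))
```

Running the same function again on its own output changes nothing, and that property is what lets a controller run in an endless loop safely.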

3.1.4. Cloud Controller Manager

The cloud controller manager is a newer component for clusters that run on a cloud provider; it was introduced as alpha in Kubernetes 1.6 and promoted to beta in 1.11. When a Kubernetes cluster runs in the cloud, the cloud controller manager connects it to the provider's API, for example to find out which nodes are running in the cluster and to configure load balancers.

3.1.5. Scheduler

As the name suggests, the scheduler assigns workloads to the available worker nodes. It watches for newly created pods that have no node yet and binds each of them to a node through the Binding API, based on parameters such as data locality, service requirements, and the resources requested versus the resources available.
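Scheduling can be thought of as two steps: filter out the nodes that cannot run the pod, then score the remaining nodes and pick the best one. The sketch below shows that idea for CPU and memory only. The node data, field names, and scoring rule are assumptions for illustration and are far simpler than what the real scheduler evaluates.

```python
def schedule(pod, nodes):
    """Return the name of the chosen node, or None if no node fits."""
    # Filtering: keep only nodes with enough free CPU and memory.
    feasible = [
        n for n in nodes
        if n["cpu_free"] >= pod["cpu"] and n["mem_free"] >= pod["mem"]
    ]
    if not feasible:
        return None  # the pod stays unscheduled

    # Scoring (toy rule): prefer the node that keeps the largest
    # fraction of its CPU and memory free after placing the pod.
    def score(n):
        cpu_left = (n["cpu_free"] - pod["cpu"]) / n["cpu_total"]
        mem_left = (n["mem_free"] - pod["mem"]) / n["mem_total"]
        return cpu_left + mem_left

    return max(feasible, key=score)["name"]


# Hypothetical cluster: CPU in millicores, memory in MiB.
NODES = [
    {"name": "node-a", "cpu_total": 4000, "cpu_free": 500,
     "mem_total": 8192, "mem_free": 4096},
    {"name": "node-b", "cpu_total": 4000, "cpu_free": 3000,
     "mem_total": 8192, "mem_free": 6144},
    {"name": "node-c", "cpu_total": 2000, "cpu_free": 1500,
     "mem_total": 4096, "mem_free": 512},
    {"name": "node-d", "cpu_total": 2000, "cpu_free": 2000,
     "mem_total": 4096, "mem_free": 4096},
]

if __name__ == "__main__":
    print(schedule({"cpu": 1000, "mem": 1024}, NODES))
```

Here `node-a` and `node-c` are filtered out for lacking CPU and memory respectively, and the score decides between the two nodes that remain.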

3.2. Worker Nodes

A worker node is a physical or virtual server on which applications are deployed; it is managed directly by the master node. Worker nodes provide the Kubernetes runtime environment.

Worker nodes host the pods, and each pod is a group of one or more containers. The applications running on worker nodes are what outside users actually reach.

3.2.1. Kubelet

Kubelet is one of the lowest-level components in Kubernetes. It supervises the containers running on its machine: it knows what is supposed to run there and checks that those containers are actually running and healthy.

Kubelet runs on every node in the cluster. Please note that kubelet doesn’t manage containers that were created outside of Kubernetes.

3.2.2. Kube-proxy

Kube-proxy runs on each worker node as a network proxy and implements the mechanism that exposes an application running on a set of pods as a network service. It watches the API server for the creation and deletion of Services and their endpoints and updates the node's routing accordingly.
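The effect of kube-proxy can be pictured as a table that maps each service to its current pod endpoints and spreads incoming connections across them. The class below is a toy, in-process sketch under that assumption. Its names and addresses are hypothetical, and round-robin is used only for readability; the real kube-proxy typically programs kernel rules (for example iptables or IPVS), and how a backend is picked depends on the mode.

```python
class ToyServiceProxy:
    """Tracks endpoints per service and hands them out in turn."""

    def __init__(self):
        self._endpoints = {}  # service name -> list of "ip:port" strings
        self._cursor = {}     # service name -> index of the next endpoint

    def on_endpoints_update(self, service, endpoints):
        """Called when the set of pods behind a service changes."""
        self._endpoints[service] = list(endpoints)
        self._cursor[service] = 0

    def route(self, service):
        """Return the endpoint that should receive the next connection."""
        endpoints = self._endpoints.get(service)
        if not endpoints:
            raise LookupError(f"no endpoints for service {service!r}")
        index = self._cursor[service]
        self._cursor[service] = (index + 1) % len(endpoints)
        return endpoints[index]


if __name__ == "__main__":
    proxy = ToyServiceProxy()
    proxy.on_endpoints_update("web", ["10.0.0.1:80", "10.0.0.2:80"])
    print([proxy.route("web") for _ in range(3)])
```

When a pod is added or removed, another call to `on_endpoints_update` replaces the list, so new connections only go to pods that currently exist.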

Conclusion:

I have given you a thousand-foot introduction to Kubernetes (K8s). In the upcoming articles, we will discuss Kubernetes in depth.